In this post I’m going to describe a testing approach that reduces the time you spend writing tests while still giving you enough confidence in your code to ship it. I’ll focus specifically on testing APIs, which are often challenging to test because business logic and IO tend to be interwoven.

I’ll also show how functional programming is particularly good at cleanly separating IO from logic, which makes the code easier to test.

API

The examples in this post are all based on an example API that uses JWT (JSON Web Token) for authentication and a database to retrieve data. First I’ll describe how the API works, which sets up the discussion of how to test it.

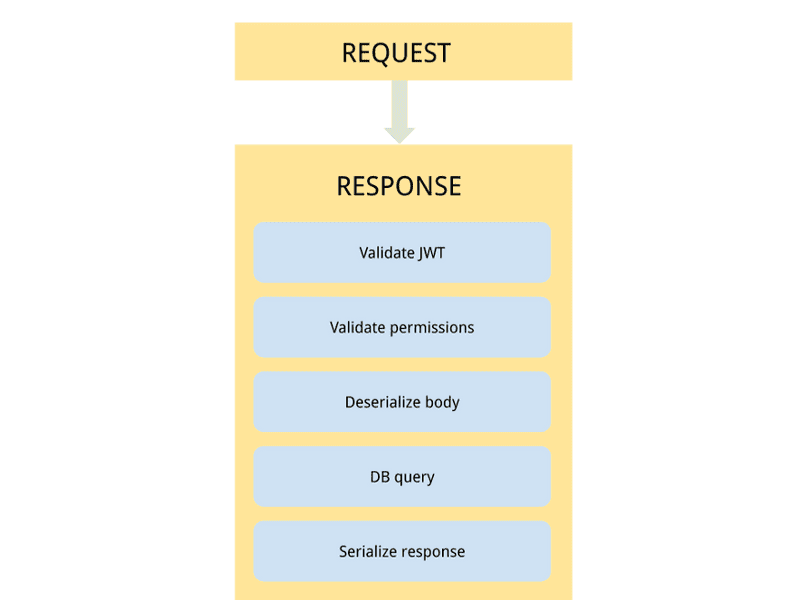

Let’s look at an example request coming to our API and what it needs to do to serve a response.

Key IO activities for this endpoint are: validating the user’s identity (Validate JWT against an IdP), their access rights (Validate Permissions using a database query), fetching data from a database (another database query) and logging the journey along the way. What catches the eye is that preparing a response requires a lot of IO interaction.

The question you want to ask yourself is: how should you write your code in a way that would best support testing of this IO-heavy API?

The answer is to make your code follow the “Functional Core, Imperative Shell” pattern, and then make the “Imperative Shell” testable by separating description from interpretation.

Functional Core, Imperative Shell

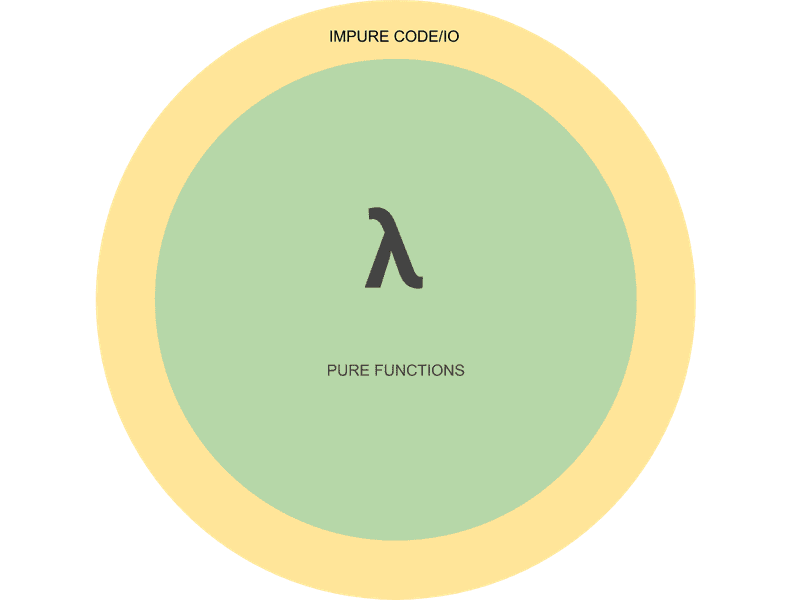

One way to make your application more testable is to use the “Functional Core, Imperative Shell” approach. This means writing your code in terms of pure functions, and pushing impure IO code to the outer edges of the program.

One of the biggest advantages of functional programming is that it is very explicit about whether your code performs IO (i.e. impure or side-effectful code). Let’s say you want to validate a JWT. Your initial signature might look something like this:

```scala
def validateJwt(token: JwtToken): Either[Error, Claims]
```

When implementing it, you soon realize that you need to call a JWKS (JSON Web Key Set) cache, which has the following signature:

```scala
def getKeys: IO[Set[Jwk]]
```

Using types forces you to make a decision. You can either modify the return type of validateJwt to include IO, explicitly stating that this function needs to talk to the outside world:

```scala
def validateJwt(token: JwtToken): IO[Either[Error, Claims]] = getKeys.map(keys => {
  /* perform validation using keys */
})
```

Or you can keep the non-IO return type by passing the keys when validating a token:

```scala
def validateJwt(token: JwtToken, keys: Set[Jwk]): Either[Error, Claims]
```

The latter approach is more testable. You can test the validation logic without depending on an external service to give you a set of JWKs. You have pushed the impure code (making an HTTP call) further outside, keeping your application core free of side-effects. You can also apply local reasoning when looking at your validateJwt code: you don't have to think about the state of the JWK cache or the HTTP calls it makes. All you have is a token and a set of keys, so it's easy to understand how the code will behave.
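As a minimal sketch of why the second signature is easier to test, the example below validates a token against an in-memory key set. The Jwk, JwtToken, Claims and ValidationError definitions are hypothetical, heavily simplified stand-ins (a real implementation would verify a signature) and are not the types used by the API in this post.

```scala
object PureValidationSketch {
  // Hypothetical, simplified stand-ins for the types used in the post.
  final case class Jwk(keyId: String)
  final case class JwtToken(keyId: String, subject: String, expired: Boolean)
  final case class Claims(subject: String)

  sealed trait ValidationError
  case object UnknownKey extends ValidationError
  case object TokenExpired extends ValidationError

  // Pure: the result depends only on the arguments, so no IO is needed to test it.
  def validateJwt(token: JwtToken, keys: Set[Jwk]): Either[ValidationError, Claims] =
    if (!keys.exists(_.keyId == token.keyId)) Left(UnknownKey)
    else if (token.expired) Left(TokenExpired)
    else Right(Claims(token.subject))
}
```

A test can then call validateJwt(JwtToken("key-1", "alice", expired = false), Set(Jwk("key-1"))) and expect Right(Claims("alice")), with no JWKS cache or HTTP call involved.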

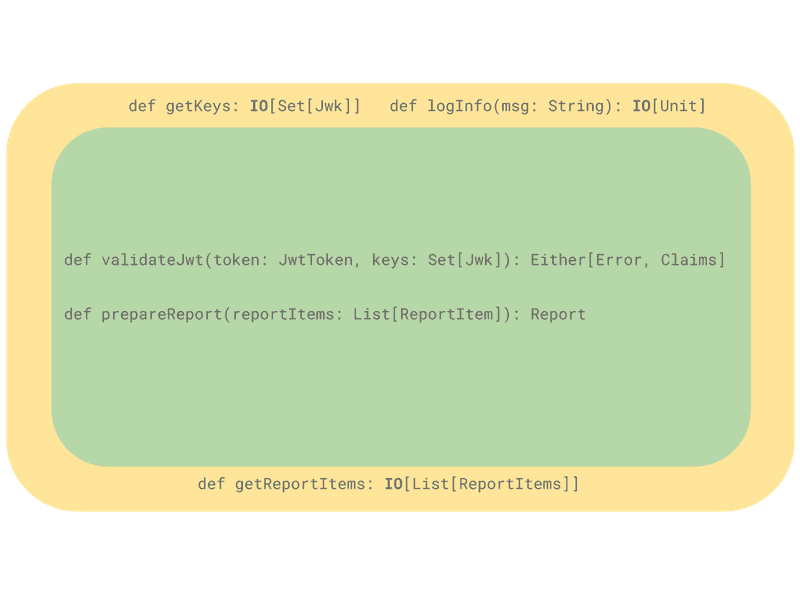

You can apply this process to the rest of your code and it will naturally push side-effectful code into the “Impreative Shell”. This is how it might look like for the example API endpoint from above:

Separating description from interpretation

The second step I recommend is to make the “Imperative Shell” itself testable.

By keeping all of our side-effectful code at the edges of the application and keeping our core “pure”, we are already making it more testable and easier to reason about.

In many cases this is not sufficient. This approach takes us as far as testing individual functions, but it does not let us test how components interact with each other. For example, in our API something needs to call the getKeys: IO[Set[Jwk]] function and feed its output to validateJwt.

How do we test this kind of interaction then? We can do so by separating the description of the program from its interpretation. The main idea is that we encode outside-world interactions in a generic way and then provide two interpretations: one for the real interactions and another one for test interactions.

This is still a developing field, but the two most popular ways to do this are “Final Tagless” and “Free Monad”. I have chosen to demonstrate the “Final Tagless” approach because it’s more flexible and requires less boilerplate code. Both approaches start with creating a Domain Specific Language (DSL) for your effectful code. This is how it looks with Final Tagless:

```scala
trait JwksDsl[F[_]] {
  def getKeys: F[Set[Jwk]]
}

trait LoggerDsl[F[_]] {
  def info(msg: String): F[Unit]
  def warn(msg: String): F[Unit]
  def error(msg: String): F[Unit]
}
```

Once the DSL is defined, we can start using it to write our application code:

```scala
// Assuming Cats, which provides Monad and the syntax used by the for-comprehension
import cats.Monad
import cats.syntax.all._

class Program[F[_]: Monad](jwks: JwksDsl[F], logger: LoggerDsl[F]) {
  def endpoint(request: Request): F[Response] = for {
    jwksKeys <- jwks.getKeys
    authToken = extractToken(request) // oversimplification
    validationResult = validateJwt(authToken, jwksKeys)
    _ <- logger.info(s"Token validation result $validationResult")
    // the rest of the code to prepare the response
  } yield response
}
```

The DSL is defined in terms of an abstract type constructor F[_], meaning that the return type has to be "wrapped" in some kind of effect F without specifying the exact type. Because the F type is not "fixed", you are free to replace it with different concrete types for testing and for running.
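To make the idea of swapping F concrete, here is a dependency-free sketch with one program and two interpretations. Everything in it is an assumption made for illustration: the small Monad trait stands in for the one a library such as Cats would provide, keys are plain strings, and Deferred and Now are hypothetical effect types (Deferred is only a crude stand-in for IO).

```scala
object TaglessSketch {
  // Minimal stand-in for a library Monad, so the sketch needs no dependencies.
  trait Monad[F[_]] {
    def pure[A](a: A): F[A]
    def flatMap[A, B](fa: F[A])(f: A => F[B]): F[B]
  }

  // Lets any F[_] with a Monad instance be used in for-comprehensions.
  implicit class MonadOps[F[_], A](fa: F[A])(implicit M: Monad[F]) {
    def flatMap[B](f: A => F[B]): F[B] = M.flatMap(fa)(f)
    def map[B](f: A => B): F[B] = M.flatMap(fa)(a => M.pure(f(a)))
  }

  // The DSL: keys are plain strings here to keep the sketch short.
  trait JwksDsl[F[_]] {
    def getKeys: F[Set[String]]
  }

  // The program is described once, against an abstract F.
  class KeyCheck[F[_]: Monad](jwks: JwksDsl[F]) {
    def isKnown(keyId: String): F[Boolean] =
      for (keys <- jwks.getKeys) yield keys.contains(keyId)
  }

  // Interpretation 1: deferred evaluation, nothing runs until run() is called.
  final case class Deferred[A](run: () => A)
  implicit val deferredMonad: Monad[Deferred] = new Monad[Deferred] {
    def pure[A](a: A): Deferred[A] = Deferred(() => a)
    def flatMap[A, B](fa: Deferred[A])(f: A => Deferred[B]): Deferred[B] =
      Deferred(() => f(fa.run()).run())
  }

  // Interpretation 2: immediate in-memory values, convenient in tests.
  final case class Now[A](value: A)
  implicit val nowMonad: Monad[Now] = new Monad[Now] {
    def pure[A](a: A): Now[A] = Now(a)
    def flatMap[A, B](fa: Now[A])(f: A => Now[B]): Now[B] = f(fa.value)
  }
}
```

With these definitions in scope, a KeyCheck[Now] built on a JwksDsl[Now] that returns Now(Set("key-1")) answers isKnown("key-1") with Now(true), while the same program built on a JwksDsl[Deferred] does not touch its key source until run() is called.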

Test Implementation

When testing, you are often interested not only in the result of the computation but also in the side-effects it would have to perform. For example, it’s often necessary to check that the proper messages were logged. This is what the testing implementation looks like:

```scala
class JwksTest(keys: Set[Jwk]) extends JwksDsl[TestProgram] {
  def getKeys: TestProgram[Set[Jwk]] = wrapped(keys)
}

class LoggerTest extends LoggerDsl[TestProgram] {
  def info(msg: String): TestProgram[Unit] = wrapped(LogInfo(msg))
  def warn(msg: String): TestProgram[Unit] = wrapped(LogWarn(msg))
  def error(msg: String): TestProgram[Unit] = wrapped(LogError(msg))
}
```

Here a TestProgram type is used, which allows recording would-be side-effects using a WriterT monad:

```scala
// Assuming Cats and Cats Effect for WriterT and IO
import cats.data.WriterT
import cats.effect.IO

sealed trait SideEffect
final case class LogInfo(msg: String) extends SideEffect
final case class LogWarn(msg: String) extends SideEffect
final case class LogError(msg: String) extends SideEffect

type TestProgram[A] = WriterT[IO, List[SideEffect], A]

// Wrap a plain value without recording any side-effect
def wrapped[A](value: A): TestProgram[A] =
  WriterT[IO, List[SideEffect], A](IO.pure((List(), value)))

// Record a would-be side-effect and return Unit
def wrapped(se: SideEffect): TestProgram[Unit] =
  WriterT[IO, List[SideEffect], Unit](IO.pure((List(se), ())))
```

wrapped is just a convenience method to create a TestProgram from either a value or a side-effect. This approach allows us to test the behavior of the application as a whole without having to write integration tests. This is what a test typically looks like:

```scala
it should "authorize a user and create an audit entry" in {
  val program = new Program(new JwksTest(jwks), new LoggerTest()) // this typically sits outside
  val request = ??? // prepare request

  // run exposes the recorded side-effects together with the response
  val (sideEffects, response) = program.endpoint(request).run.unsafeRunSync()
  val status = response.status
  val logs = sideEffects.collect({ case LogInfo(msg) => msg })

  status shouldBe Status.Ok
  logs.count(_.contains("Authentication successful")) shouldBe 1
}
```

Tests treat the API as a black-box function from Request to Response with side-effects. The above example tests successful authentication of a user.

When a Request is sent, the implementation details of how a valid Response is created are not important, as long as the Response satisfies the contract of our API.

What needs to be tested here is whether a valid token will be accepted and the audit entry will be created in logs. It is irrelevant whether it called validateJwt to validate a token, or some other function, or even delegated the whole thing to an external library. You are testing the behavior, not the implementation.

Notice that the tests don't check whether LoggerTest was called with a certain method; all they check is that a LogInfo side-effect was produced. Whether it was produced by the logger, by the endpoint itself or even by Jwks is irrelevant. What matters is that when the endpoint is called, it does what it was designed to do.
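To see how a Writer-style TestProgram accumulates would-be side-effects, here is a dependency-free sketch. Recorded is a hypothetical, simplified replacement for WriterT[IO, List[SideEffect], A] without the IO layer, and the endpoint, audit and record functions, the status codes and the warning message are all invented for illustration.

```scala
object RecordingSketch {
  sealed trait SideEffect
  final case class LogInfo(msg: String) extends SideEffect
  final case class LogWarn(msg: String) extends SideEffect

  // A value together with the side-effects recorded while computing it.
  final case class Recorded[A](effects: List[SideEffect], value: A) {
    def flatMap[B](f: A => Recorded[B]): Recorded[B] = {
      val next = f(value)
      Recorded(effects ++ next.effects, next.value) // logs are appended in order
    }
    def map[B](f: A => B): Recorded[B] = Recorded(effects, f(value))
  }

  def wrapped[A](value: A): Recorded[A] = Recorded(Nil, value)
  def record(se: SideEffect): Recorded[Unit] = Recorded(List(se), ())

  // Records the audit entry instead of writing it to a real log.
  def audit(known: Boolean, keyId: String): Recorded[Unit] =
    if (known) record(LogInfo("Authentication successful"))
    else record(LogWarn(s"Unknown key $keyId"))

  // A tiny stand-in for an endpoint: checks the key, logs, returns a status code.
  def endpoint(keyId: String, keys: Set[String]): Recorded[Int] =
    for {
      known <- wrapped(keys.contains(keyId))
      _     <- audit(known, keyId)
    } yield if (known) 200 else 401
}
```

Calling endpoint("key-1", Set("key-1")) yields the value 200 together with List(LogInfo("Authentication successful")), so a test can assert on both the result and the recorded log without any real logger being involved.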

Production Implementation

For the code to be executed in a production setting a real implementation needs to be provided. Here are examples for the DSLs created above:

```scala
class Jwks(httpClient: HttpClient, jwksUrl: Uri) extends JwksDsl[IO] {
  def getKeys: IO[Set[Jwk]] = httpClient.get[Set[Jwk]](jwksUrl)
}

class Logger extends LoggerDsl[IO] {
  // The slf4j logger is used directly so its type does not clash with this class's name
  private val logger = LoggerFactory.getLogger("analytics-api")

  def info(msg: String): IO[Unit] = IO(logger.info(msg))
  def warn(msg: String): IO[Unit] = IO(logger.warn(msg))
  def error(msg: String): IO[Unit] = IO(logger.error(msg))
}
```

Now that the application code is fully tested, including the HTTP layer and the wiring between components, one part of the code remains untested: the production DSL implementations. This is the code that makes HTTP requests and reads from a database or memory. These implementations need to actually interact with the outside world to be tested. Let’s take another look at the production implementation of JwksDsl:

```scala
class Jwks(httpClient: HttpClient, jwksUrl: Uri) extends JwksDsl[IO] {
  def getKeys: IO[Set[Jwk]] = httpClient.get[Set[Jwk]](jwksUrl)
}
```

To test it you’d have to supply it with an HttpClient and a Uri, call getKeys and inspect the result. This can be a very simple integration test which calls the real endpoint and checks that it gets some JWKs back. Because the interface is so simple there is not much to test, so the integration tests are very quick.

Writing good DSLs

The simpler the DSL, the easier and faster it is to test, but it is important to find the right level of abstraction. Taking the previous approach to the extreme, you could have a low-level HttpDsl with HTTP methods like this:

```scala
trait HttpDsl[F[_]] {
  def get[T](uri: Uri): F[T]
  def post[P, T](uri: Uri, body: P): F[T]
  // ... and so on
}
```

and then use it instead of JwksDsl in your code:

```scala
class Program[F[_]: Monad](http: HttpDsl[F], logger: LoggerDsl[F], jwksUrl: Uri) {
  def endpoint(request: Request): F[Response] = for {
    jwksKeys <- http.get[Set[Jwk]](jwksUrl) // instead of jwks.getKeys
    // the rest of the code to prepare the response
  } yield response
}
```

At first glance this looks like an improvement over the less generic JwksDsl, since you only have to test the HttpDsl implementation once and can then reuse it for all of your HTTP requests. The problem is that this approach will not give you enough confidence that the code works correctly. Testing the higher-level JwksDsl hits the real endpoint with real headers and confirms that the response can be deserialised into the case classes. You would not have the same confidence if you were to test HttpDsl on its own.

On the other hand, it is important to limit a DSL to the external interaction only and to keep its functionality as small as possible. For example, you could have combined the JWT verification logic and the fetching of JWKs like this:

```scala
trait JwtVerificationDsl[F[_]] {
  def verify(token: String): F[Either[String, Claims]]
}
```

Since it needs to perform an HTTP request, it can only be tested in an integration test setting. This means it will have to call the JWKS endpoint even if you just want to verify that it correctly extracts claims from a token.

Pros & Cons

Pros

- Testing what matters.

This approach allows you to test what matters to the user of the API.

By having the tests on the Request/Response level it's easier to translate requirements into tests.

These kinds of high-level tests are a good source of documentation: it is clear what the endpoint does just by looking at the input and output.

- Fearless refactoring.

It might look like this kind of approach can lead to spaghetti code, since there are no tests for individual classes guiding the design and interactions between components. In reality, the combination of functional programming, which promotes small pure functions, and the freedom to move things around leads to constant small improvements to the codebase.

How many times have you thought twice about moving a method between classes, or just renaming it, for fear that mock-based tests would break? Even after the tests are fixed, how confident are you that you haven’t introduced bugs into the tests themselves?

- Clearly defined application boundaries.

It’s easy to look at the DSL definitions and see how the application interacts with the outside world.

- Bigger coverage with fewer tests.

The example test covers extracting the token from a request, verifying it with JWK, extracting the claims, and testing how these parts are wired together. This is like having an integration test which runs at the speed of a unit test.

Cons

- Since Request/Response types come from an HTTP library, your tests are tied to that library.

- Tests are not isolated, so a single bug can cause multiple tests to fail.

It’s important to note that one testing approach doesn’t preclude the other. You can still have classic unit tests for individual functions and methods where the additional overhead of writing application-level tests is not worth it.

Conclusion

To recap:

- Identify IO interactions and push them to the edges of your application.

- Use Final Tagless, Free Monad or any other approach that separates the description of the program from its interpretation.

- Provide a test interpretation and use it in unit tests of the application logic.

- Provide a production interpretation and test it with integration tests that talk to real systems.